When Algorithms Curate Reality

3/16/2025

Today, I want to discuss the impact of language models on search results. I’ll start with classic results from engines like Google, Yahoo, and DuckDuckGo. Then, we will look at the role of language models in synthesized results. Finally, we will look deeper into the core of the problem and consider important skills for using the internet as an information source.

Classic Search Engine Results

Some people fear that AI-generated content on websites, which search engines rank in the first place, could reduce the relevance of search results over time. Such content occasionally contains inaccurate or downright false information, and critics claim that this is how these models are destroying the internet. However, that problem existed before the public success of language models. Since the beginning of the internet, the visibility of a website has been an essential factor in earning money, and search engines are the typical channel through which people reach a website. So website providers try to optimize their sites so that search engines present them as prominently as possible.

This strategy is known as search engine optimization (SEO). SEO-optimized websites shape their content to be placed first in search results and do not necessarily provide valuable content for the user. The user reaches the website through the search engine, and the provider earns money from the advertisements. Such providers have no interest in checking the facts because that is expensive, and they do not earn much from the advertising. It is typically difficult for users to recognize misinformation because they are searching for information that is new and unknown to them. With the rise of language models, generating content has become much easier. The models are used to generate SEO content whose purpose is to rank high in search engine results, not to be good content in itself. So it is a problem when companies publish questionable content only for advertisement money and users believe it.

Furthermore, many SEO-optimized websites tend to simplify issues. The result is acceptable to the user but not always exact enough. This simplification also increases the likelihood of positive feedback, because search engines judge a website as successful when the user does not look further into the topic. If users go to a website and find the information, they typically move on to the next search term, which the search engine takes as an indicator that the search was successful. If the user goes back and looks at a second site, the search engine algorithm interprets that as a sign that the necessary information was not available. However, going back may be intentional, especially for ambiguous topics, because users read the first website and then look for arguments from a second source to round out their understanding of the problem. As a result, search engines frequently rank simple, unambiguous content higher.
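The feedback signal described above can be illustrated with a toy heuristic. This is only a sketch under assumptions: search engines do not publish how they interpret user behavior, and the `Visit` type, the action labels, and the rule itself are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    url: str
    next_action: str  # hypothetical labels: "new_query", "back_to_results", "none"


def looks_successful(visit: Visit) -> bool:
    # Assumed rule: a visit counts as a success unless the user
    # returns to the results page to open another site.
    return visit.next_action != "back_to_results"


def satisfaction_rate(visits):
    # Share of "successful" visits per URL under the assumed rule.
    counts = {}
    for v in visits:
        ok, total = counts.get(v.url, (0, 0))
        counts[v.url] = (ok + int(looks_successful(v)), total + 1)
    return {url: ok / total for url, (ok, total) in counts.items()}
```

Under such a rule, a page on an ambiguous topic that sends readers looking for a second opinion scores worse than a page that oversimplifies, even if the first page is the more accurate one.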

What could we do to solve this kind of problem? For users, the situation also has an upside. In the past, SEO content was written by humans, so it was not easy to distinguish from genuine content. Nowadays, if you know these systems, their output is easier to recognize. Providers of SEO spam websites are not interested in polished language, so they often publish the raw output of these models. In that sense, even this negative scenario has a benefit. In the end, as a user, you also have to find out a bit more about a website to build trust. You should prefer articles that provide some background information, and you should check whether specific sources are mentioned so that you can verify the information behind them. Missing sources are a typical problem, especially if the content was generated without providing background information to the model. In such cases, the information might be misleading. Lastly, as a user, you can use search engines with a smaller market share, such as DuckDuckGo. SEO websites are optimized for a specific search engine, typically Google as the market leader. Using DuckDuckGo, for example, can make you less vulnerable to such manipulation.

As a website provider, you may also use generated content. If you do, curate it and verify that the content is really correct: treat the generated text as a first draft, then validate and improve it. Often, SEO sites have no interest in doing so because they want to make fast money, and expertise in a given area is expensive. However, such providers cannot build a valuable reputation in the long run. Additionally, search engines reduce the visibility of websites that use black hat SEO strategies. Google considers generated content that adds no value for users to be spam.

Synthesized Search Engine Results

Apart from that, synthesized results are becoming more relevant. Users sometimes get an overview result that collects the information for them in advance. Users can also use large language models like ChatGPT, activate the search functionality, and search for specific information. That is a new, emerging form of search engine. The idea is that users do not have to click through multiple sites and instead get one consistent user experience. These systems are especially interesting if you want better accessibility, which is often a problem with websites because each one is built by different developers. If language models incorporate the content of these websites, they can provide a more accessible experience. With new personal assistants based on text-to-speech and speech-to-text systems, it also becomes possible for non-technical users or users with little knowledge of a field to gain access. If you can talk to a person, you can also speak to such a system and get information from it. More customized answers are another benefit. Users often have a concrete problem and want the exact solution to it. Websites, in contrast, usually provide various solutions for similar but not identical issues. These models can generalize to a certain degree and may solve the problem even if no website directly provides the solution.

In that area, there are also some problems. First, these models tend to hallucinate. They always produce some output for a given input, and that output is not guaranteed to be correct. It is also hard to describe what knowledge is stored in the neural network and how one can access it. As a countermeasure, retrieval-augmented generation (RAG) and finetuning can improve the results. Developers can supply websites related to the topic as context to improve the outcome. They can also finetune language models for search tasks to reduce hallucinations to a certain degree. Budakoglu et al. showed the influence of these methods on datasets such as the Stanford Question Answering Dataset (SQuAD). However, these methods are not entirely error-proof, and the research in that area is not yet conclusive. Xu et al. showed that using ChatGPT improved users’ ability to answer general questions compared to using Google, but their ability to check facts was reduced. Fernández-Pichel et al. conducted a study that showed that users could find health information more reliably with RAG. However, the study also illustrates the problem of LLMs hallucinating certain information.
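To make the RAG idea more concrete, here is a minimal sketch of its two steps: picking relevant documents and placing them in the prompt as context. It is an illustration under assumptions, not how any particular product works. The retrieval is a naive word-overlap score (real systems typically use embeddings or a search index), and the function names and the document format with `url` and `text` keys are hypothetical.

```python
import re


def tokenize(text):
    # Lowercased set of alphanumeric words.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=2):
    # Naive retrieval: rank documents by how many words they share with the question.
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d["text"])), reverse=True)
    return ranked[:k]


def build_prompt(question, documents, k=2):
    # Put the retrieved passages, with their URLs, in front of the question.
    context = retrieve(question, documents, k)
    sources = "\n".join(
        f"[{i + 1}] {d['url']}: {d['text']}" for i, d in enumerate(context)
    )
    return (
        "Answer using only the sources below and cite them by number. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )
```

The resulting prompt would then be sent to a language model. Because the sources are listed with their URLs, the system can show the user which context was used, although the model can still misreport what a source says.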

Another aspect is that language models transform the content of these websites in more sophisticated ways than a classic search engine does. The results tend to be biased, whether intentionally or unintentionally. These models are trained on a large amount of data, and the texts may contain racist or gender stereotypes that the developers are not aware of. Biases could also be introduced intentionally to influence users’ political or economic decisions. Furthermore, there is a problem of explainability. For many language models, it is not easy to explain why a particular text appears. There is no easy way to determine which part of the training set ultimately influenced the result. RAG can reduce this problem to a certain degree, because the system can provide the context it used and users can check it. However, these attempts are also limited. Chung-En et al. showed an approach to creating more interpretable LLMs, but this research area is still at an early stage. Finally, larger language models consume more energy than classic algorithmic approaches, which becomes a problem for the climate as adoption grows.

Here, you can consider different strategies to improve the current situation. First of all, it is important to define certain quality standards. Sachin et al. describe how to avoid harm from LLMs across the entire development process, from data collection and model training to the deployment of the application itself. I recommend using open source AI models. Where the training data is published, you can examine it to get an idea of what information might be included in the language model. It is also harder for third parties to introduce biases that manipulate your decisions. Mistral Small 3 and TinyLlama are examples of such models. It also makes sense to use smaller models that you can run locally. While their quality might not be completely equivalent, they are often good enough, and you can reduce the energy required. Ollama allows you to run models from the terminal, and Open WebUI provides a web page that runs on your local computer. It looks similar to ChatGPT, and you can provide documents or even activate web search there.
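Besides the terminal, a locally running Ollama server can also be queried from a script over its local HTTP API. The following is a minimal sketch, assuming Ollama is running on its default port 11434 and that a model named `tinyllama` has already been pulled; the helper names `build_request` and `ask` are my own.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="tinyllama"):
    # "stream": False asks for one complete JSON answer instead of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def ask(prompt, model="tinyllama"):
    # Sends the prompt to the local server and returns the generated text.
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Usage (requires a running Ollama server with the model available):
# print(ask("Summarize the risks of SEO spam in two sentences."))
```

Since the request never leaves your computer, neither your prompts nor your documents are sent to a third party.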

Old Problem, New Relevance

Since the beginning of the internet, there has been a shift in trust. Previously, publishers with a good reputation acted as gatekeepers for the content provided in books. With the rise of the internet, everybody can share content on websites, so there is no longer a double-checking system. However, many people do not realize this change and have transferred their trust from books to websites. Nowadays, many people do not consider information valid if they cannot find it on Google. Or, if they find different information there, they might think that the person who told them otherwise is automatically wrong. This shift in trust began much earlier and will only be intensified by large language models, because publishing new content is now easier when nobody has to write the text manually. There are also some more fundamental problems we have to discuss.

More support for critical thinking would be necessary. People should always ask, “What is the source behind that?” Often, articles do not cite where their information comes from, and that is a big problem. However, this approach is also limited. Previously, a few major publishers were known to be trustworthy. Nowadays, many different and changing actors exist, so it is not always easy to verify that a source is reliable.

Additionally, more training in analytical thinking would be important. I think you should question the assumptions in texts: Do I have the domain knowledge that allows me to trust these assumptions? Or does the text introduce questionable assumptions about topics that I simply accept? Also, consider deconstructing arguments: Are they logically coherent, or do they contain mistakes or odd logical conclusions?

In that context, language models could also be helpful as a sort of debate partner because I can challenge them just as I would challenge a human. When I read a text, I can ask the model, “Is that true? What do you think about that?” It is an interesting possibility to have a language model to discuss with. Of course, you must remember that its knowledge is limited to specific topics and might be censored in certain areas or somewhat biased, but it is at least a reasonable way to train critical thinking. You should also rely on humans for that, although finding someone who wants to discuss a specific topic with you is not always easy.

Additionally, time is really limited nowadays, and we have moved toward an attention economy. If I produce a new text, I get attention. If I spend more time validating or improving existing content, that work is not valued as much. Google Search uses freshness as a critical factor for its ranking. That is why we get a lot of new content but not much content whose quality improves over time. It would be good to invest more effort in supporting quality content.

Also, paying more for the content we read and use would be a good idea because otherwise, it is not easy for content creators to perform this verification. For example, if you let ChatGPT quickly write a text, it does not require much time and attention, but if you do proper validation, you need more time to improve the quality iteratively, and these changes are not always visible.

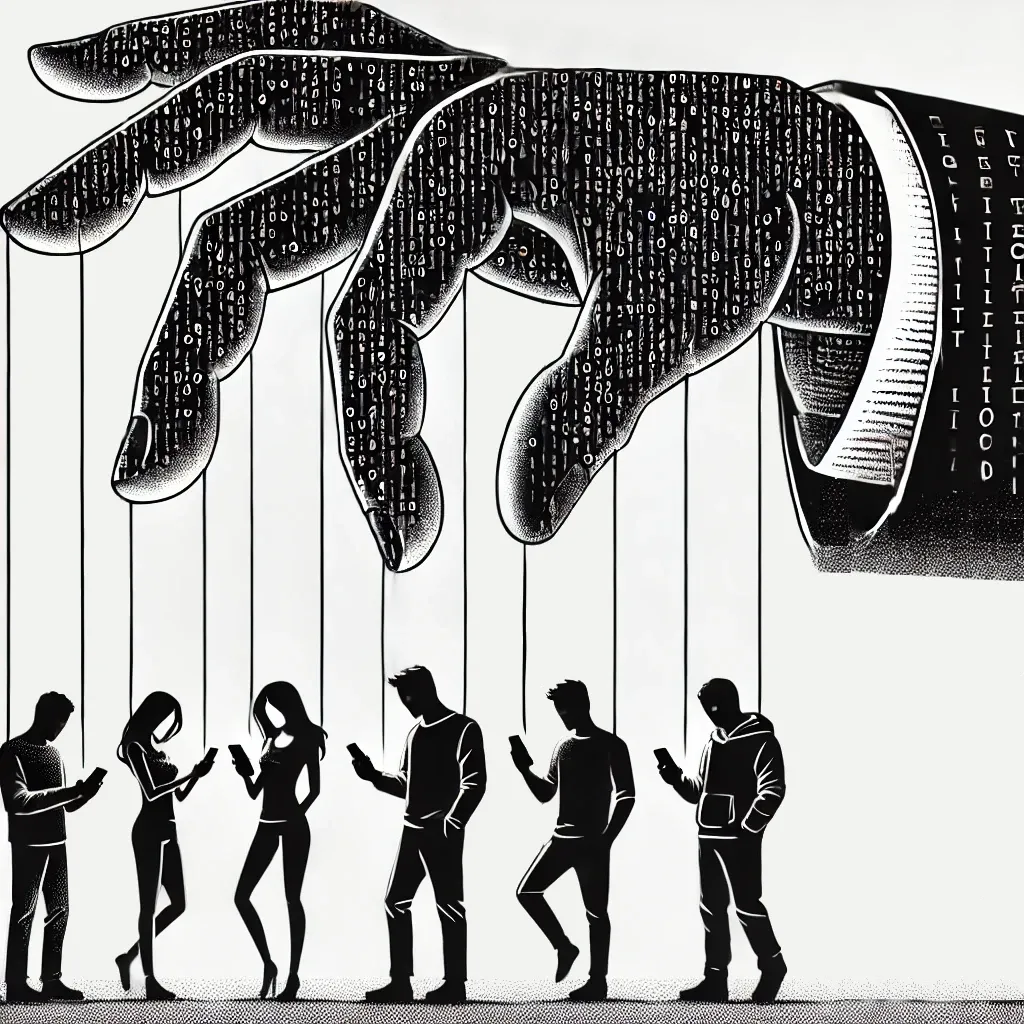

Finally, we should also get more information about the algorithms that decide what we see. In the end, these algorithms have a lot of power because we read the information they select and build our worldview around it. The economic interests of Google as an advertisement platform are not always aligned with our social interests, so Google has no interest in sharing these algorithms. There should be stronger legal requirements to publish them, and it should be possible for users to decide which information they want to see about a certain topic.

Currently, as a user, you can design your own information channels. For example, you can use an RSS reader to get news from selected trustworthy sites. You can also use apps like FreeTube or NewPipe to watch specific YouTube videos based on your own decisions instead of the recommended ones. However, especially if you are looking for new information, it is not easy to avoid search engines altogether because building your own search engine is a huge challenge. In that context, it makes sense to use more socially responsible search engines like DuckDuckGo. You still face the problem of being somewhat dependent on these providers. Complex decisions are never possible without trust.
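As a small illustration of the RSS idea, the following sketch reads the headlines from an RSS 2.0 feed using only the Python standard library. The feed content and the example.org addresses are made up for the example. A real reader would download the feeds of the sites you selected and would also handle other formats such as Atom.

```python
import xml.etree.ElementTree as ET

# Made-up sample feed in RSS 2.0 format.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example News</title>
    <item>
      <title>First headline</title>
      <link>https://example.org/first</link>
    </item>
    <item>
      <title>Second headline</title>
      <link>https://example.org/second</link>
    </item>
  </channel>
</rss>
"""


def parse_rss(xml_text):
    # Collect title and link of every <item> element in the feed.
    root = ET.fromstring(xml_text)
    return [
        {
            "title": item.findtext("title", default="").strip(),
            "link": item.findtext("link", default="").strip(),
        }
        for item in root.iter("item")
    ]


for entry in parse_rss(SAMPLE_FEED):
    print(entry["title"], "->", entry["link"])
```

The point is that you choose the list of feeds yourself, so no recommendation algorithm decides which headlines you see.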